irc-disentanglement

This repository contains data and code for disentangling conversations on IRC, as described in the following two papers:

- A Large-Scale Corpus for Conversation Disentanglement, Jonathan K. Kummerfeld, Sai R. Gouravajhala, Joseph Peper, Vignesh Athreya, Chulaka Gunasekara, Jatin Ganhotra, Siva Sankalp Patel, Lazaros Polymenakos, and Walter S. Lasecki, ACL 2019

- Chat Disentanglement: Data for New Domains and Methods for More Accurate Annotation, Sai R. Gouravajhala, Andrew M. Vernier, Yiming Shi, Zihan Li, Mark Ackerman, Jonathan K. Kummerfeld

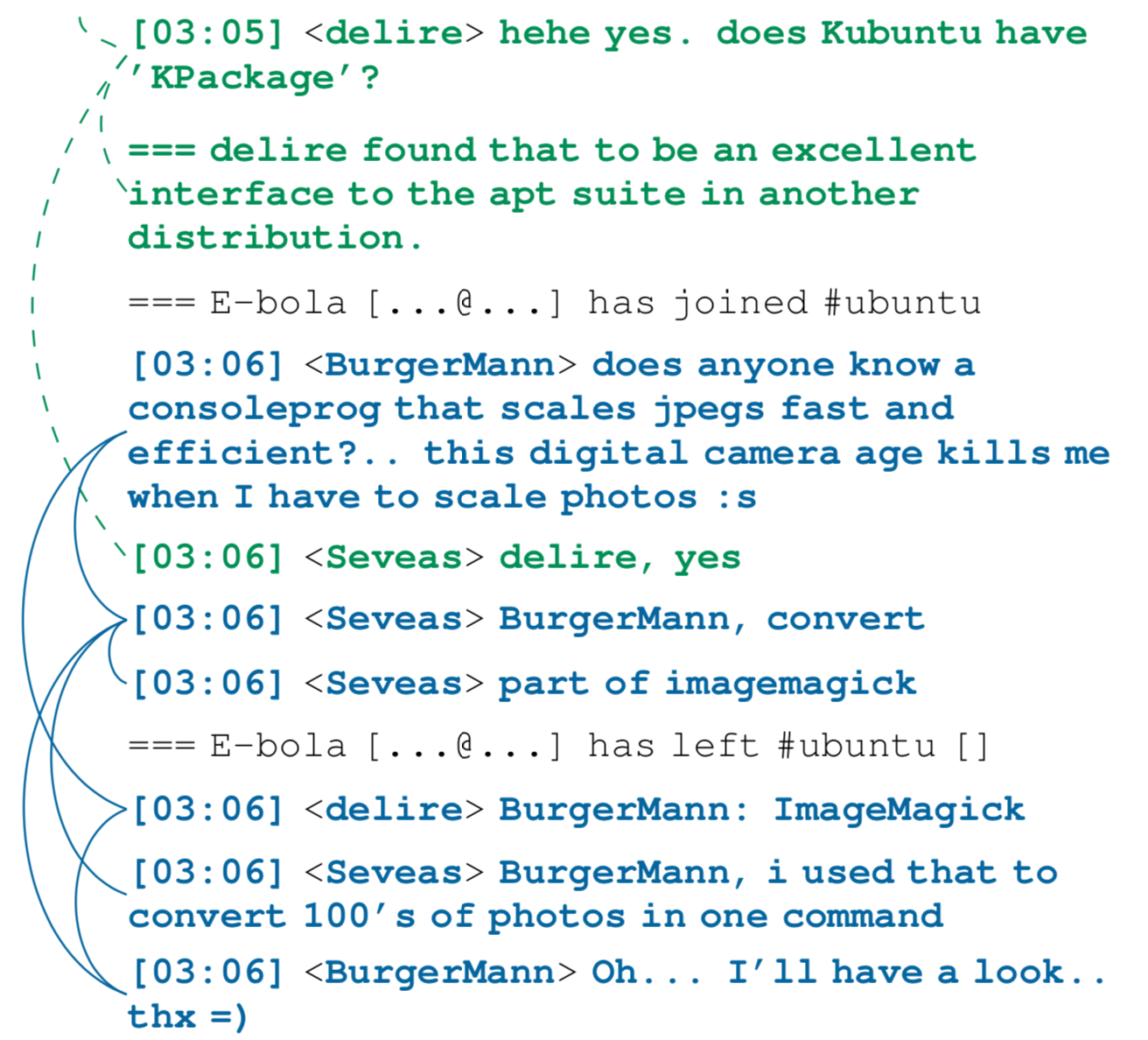

Conversation disentanglement is the task of identifying separate conversations in a single stream of messages. For example, the image below shows two entangled conversations and an annotated graph structure (indicated by lines and colours). The example includes a message that receives multiple responses, when multiple people independently help BurgerMann, and the inverse, when the last message responds to multiple messages. We also see two of the users, delire and Seveas, simultaneously participating in two conversations.

The 2019 paper:

- Introduces a new dataset, with disentanglement for 77,563 messages of IRC.

- Introduces a new model, which achieves significantly higher results than prior work.

- Re-analyses prior work, identifying issues with data and assumptions in models.

The 2023 paper:

- Introduces a multi-domain dataset, with enough annotated data for evaluation.

- Studies annotation methods, showing that guidance can improve accuracy of non-expert annotation, but crowd annotation remains a challenge.

To get our code and data, download this repository in one of these ways:

- Download .zip

- Download .tar.gz

git clone https://github.com/jkkummerfeld/irc-disentanglement.git

The data is also available here:

This repository contains:

- The annotated data for Ubuntu, Channel Two (2019 paper), and four new channels (2023 paper).

- The code for our model.

- The code for tools that do evaluation, preprocessing and data format conversion.

- A collection of 496,469 automatically disentangled conversations from 2004 to 2019 in a bzip2 file.

If you use the data or code in your work, please cite our work as:

@InProceedings{acl19disentangle,

author = {Jonathan K. Kummerfeld and Sai R. Gouravajhala and Joseph Peper and Vignesh Athreya and Chulaka Gunasekara and Jatin Ganhotra and Siva Sankalp Patel and Lazaros Polymenakos and Walter S. Lasecki},

title = {A Large-Scale Corpus for Conversation Disentanglement},

booktitle = {Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics},

location = {Florence, Italy},

month = {July},

year = {2019},

doi = {10.18653/v1/P19-1374},

pages = {3846--3856},

url = {https://aclweb.org/anthology/papers/P/P19/P19-1374/},

arxiv = {https://arxiv.org/abs/1810.11118},

software = {https://www.jkk.name/irc-disentanglement},

data = {https://www.jkk.name/irc-disentanglement},

}

@InProceedings{alta23disentangle,

author = {Sai R. Gouravajhala and Andrew M. Vernier and Yiming Shi and Zihan Li and Mark Ackerman and Jonathan K. Kummerfeld},

title = {Chat Disentanglement: Data for New Domains and Methods for More Accurate Annotation},

booktitle = {Proceedings of the The 21st Annual Workshop of the Australasian Language Technology Association},

location = {Melbourne, Australia},

month = {November},

year = {2023},

doi = {},

pages = {},

url = {},

arxiv = {},

data = {https://www.jkk.name/irc-disentanglement},

}

Running and Reproducing Results

See the src folder README for detailed instructions on running the system. Additional evaluation script information can be found in the tools README.

Updates

- The description of the voting ensemble in the paper has a mistake. When not all models agree, the most agreed upon link is chosen (ties are broken by choosing the shorter link).

Questions

If you have a question please either:

- Open an issue on github.

- Mail me at jkummerf@umich.edu.

Contributions

If you find a bug in the data or code, please submit an issue, or even better, a pull request with a fix. I will be merging fixes into a development branch and only infrequently merging all of those changes into the master branch (at which point this page will be adjusted to note that it is a new release). This approach is intended to balance the need for clear comparisons between systems, while also improving the data.

Acknowledgments

The material from the 2019 paper is based in part upon work supported by IBM under contract 4915012629. The material from the 2023 paper is based in part upon work supported by DARPA (grant #D19AP00079), and the ARC (DECRA grant). Any opinions, findings, conclusions or recommendations expressed are those of the authors and do not necessarily reflect the views of these other organisations.